Okay... BIG qualifier here: this post pertains to a 2008 Mac Pro running Ubuntu 18.04.5 LTS. If you're expecting some sort of cutting-edge hardware review, this isn't it. However, I fetishize old computers, and my 2008 Mac Pro holds up well enough against contemporary machines that it can handle just about anything I throw at it as a web developer in 2021. This computer has been an ongoing project, and I enjoy tweaking it here and there to see just how well it can keep up. I recently purchased some PCIe cards (adapters, really) to run drives from, and in this post I'd like to casually review which card, which drive, and which connection gets me the best performance. I'll also discuss which tools on Ubuntu/Linux help me assess this.

Background

Now, technically speaking, most of the information in this review could easily be found in product descriptions. Product descriptions, however, aren't always all they're cracked up to be. I'd rather not take it for granted that the maximum throughput listed on a product is what you'll actually get on your own computer. There seem to be enough variables affecting data throughput (reading from and writing to disks) that your particular setup inevitably becomes a factor. If my testing turns out to be redundant, that's fine. At least I'll have the satisfaction of knowing I can take manufacturers at their word.

My Setup - The 2008 Mac Pro

About three years ago I bought a banged-up Mac Pro off eBay. By banged-up, I mean the case was (and still is) deformed. It was a risky buy, yes, but I figured it would be a fun project to work on if it didn't boot correctly. Sure enough, it didn't boot correctly, but after a little tinkering it seemed all it needed was a new PRAM battery and a PRAM/SMC reset. I dropped in the hard drive from another Mac and was up and running (although it took a bit longer to finesse a Xubuntu install).

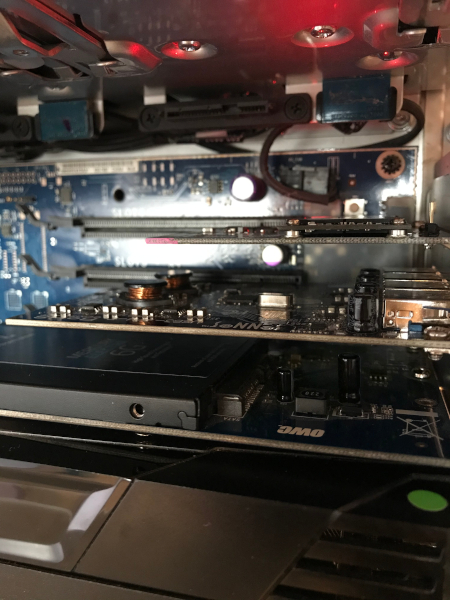

With two quad-core 3GHz processors, four drive bays and four PCIe 2.0 slots, I figured I could drop a few bucks on the beast and turn it into a proper work computer. I grabbed a GeForce GTX 670 off eBay, upgraded to 48GB of RAM (one word: virtualization), and invested in a slew of PCIe cards: a USB 3.2 card, an mSATA adapter (for mSATA SSDs), and a 2.5" SATA SSD Host Adapter. All told, I put some decent cash into the computer, which is all the more reason to make sure it's optimized.

Despite all this investment, for the longest time I ran a traditional hard disk. It was only recently (in a desperate attempt to find write-offs for my business) that I finally bothered to get a 2.5" SSD for the boot disk. Ubuntu distros always booted faster than OS X, but after moving to the SSD, the difference in boot time was like night and day! "That's it!" I thought. "I gotta take the time to make sure this baby is optimized." Hence this blog post!

Off to the Races

So here's what I'm looking to sum up: I currently run a 2.5" solid-state drive from the SATA SSD Host Adapter, a PCIe card seated in the x16 PCIe 2.0 slot. This SSD is my system disk (Ubuntu 18). Documentation says the x16 slot is where the PCIe card will perform best, and I've long taken it for granted that this is the best place for the SSD in the first place. I want to test whether that's really true, and I'd like to compare its performance against two other disk types: an mSATA SSD I recently purchased alongside a PCIe mSATA adapter, and a standard mechanical drive.

Let's start with a quick look at where else I might put my 2.5" SSD:

You can pretty easily see my options: the top two PCIe slots are x4; below them is the x16 slot; above the PCIe slots is a SATA connector for one of the drive bays. The manufacturer says the x4 slots will result in lower throughput; that's probably not debatable, but I've never tested the difference. I'm also curious how the x4 and x16 slots compare to the SATA drive bay. To compare the three, I can run some simple tests from the command line with hdparm.

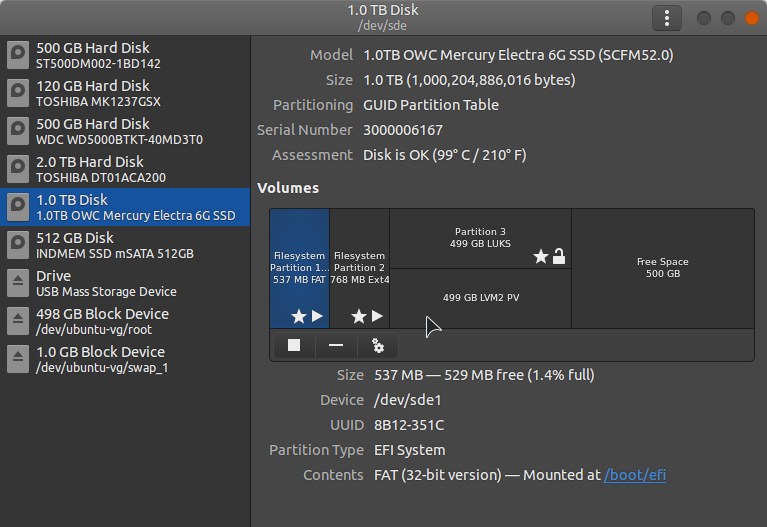
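To put rough numbers on why slot and port choice matter, here's a back-of-the-envelope sketch of each link's theoretical ceiling. It assumes PCIe 2.0 signals at 5 GT/s per lane and SATA at 3 or 6 Gb/s, both with 8b/10b encoding (8 data bits carried per 10 bits on the wire). These are upper bounds for the link, not predictions of real-world speed, and a SATA SSD sitting on a PCIe adapter is still capped by its own SATA link no matter how wide the slot is. Which SATA speed the Mac Pro's bays actually negotiate is an assumption worth checking; the kernel log usually reports it in lines like "SATA link up 3.0 Gbps", which you can find with dmesg.

```python
# Back-of-the-envelope link ceilings (assumed signalling rates, not measurements).
# PCIe 2.0: 5 GT/s per lane; SATA: 3 or 6 Gb/s. Both use 8b/10b encoding,
# so only 8 of every 10 bits on the wire are payload.


def usable_mb_per_s(gigatransfers_per_s: float, lanes: int = 1) -> float:
    """Payload bandwidth in MB/s for an 8b/10b-encoded serial link."""
    line_bits_per_s = gigatransfers_per_s * 1e9 * lanes
    data_bits_per_s = line_bits_per_s * 8 / 10  # strip the encoding overhead
    return data_bits_per_s / 8 / 1e6            # bits -> bytes -> megabytes


LINKS = {
    "PCIe 2.0 x16": usable_mb_per_s(5.0, lanes=16),
    "PCIe 2.0 x4": usable_mb_per_s(5.0, lanes=4),
    "SATA 6Gb/s": usable_mb_per_s(6.0),
    "SATA 3Gb/s": usable_mb_per_s(3.0),
}

for name, mb_per_s in LINKS.items():
    print(f"{name:>14}: {mb_per_s:7.0f} MB/s")
```

If one of the slots turns out to be an older PCIe 1.1 slot rather than 2.0, calling usable_mb_per_s(2.5, lanes=4) gives its lower 1000 MB/s ceiling. The manufacturer's 513 MB/sec figure for the 2.5" SSD sits just under the 600 MB/s SATA 6Gb/s ceiling, which is why that link, not the PCIe slot width, is the first hard limit for that drive.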

Before we get to the tests, though, we'll want to take note of the path to the drive. There are lots of ways to do this on the command line (e.g., lsblk or sudo fdisk -l), but I personally like the readability of GNOME's Disks application. Let's open it up and see if we can find the path to my startup disk:

In my case, my startup disk is a 1TB OWC SSD. If you select the disk from the left-hand sidebar, you should see its path listed right under the device name in the title bar of the window; in this particular case, /dev/sde. When I run my tests I'll have to be careful with this path: depending on where I put this drive, or other drives, the path is likely to change. Generally speaking, though, it will take the form /dev/sd[some letter, a-z]. Now that we know how to find the disk path, let's check out hdparm.
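Because the sd letter shifts whenever drives move between slots and bays, it can help to print which path belongs to which drive right before each run. Below is a minimal sketch that reads the model string and size Linux exposes under /sys/block. It assumes the drives show up as sd* devices (as they do on this machine) and skips everything else; the function name list_disks is my own. From a terminal, lsblk -o NAME,MODEL,SIZE gives much the same information.

```python
import os


def list_disks(sys_block: str = "/sys/block") -> dict:
    """Map each /dev/sdX path to (model, size in GB) as reported by sysfs."""
    disks = {}
    for name in sorted(os.listdir(sys_block)):
        if not name.startswith("sd"):  # skip loop, ram, nvme and other devices
            continue
        base = os.path.join(sys_block, name)
        try:
            with open(os.path.join(base, "device", "model")) as f:
                model = f.read().strip()
        except OSError:
            model = "unknown"
        try:
            with open(os.path.join(base, "size")) as f:
                sectors = int(f.read())  # sysfs counts size in 512-byte units
        except (OSError, ValueError):
            sectors = 0
        disks["/dev/" + name] = (model, round(sectors * 512 / 1e9, 1))
    return disks
```

Usage is a simple loop: for path, (model, size_gb) in list_disks().items(): print(path, model, size_gb, "GB"). Matching the 1TB size against the path is usually enough to tell the OWC system disk apart from the others.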

Testing Performance

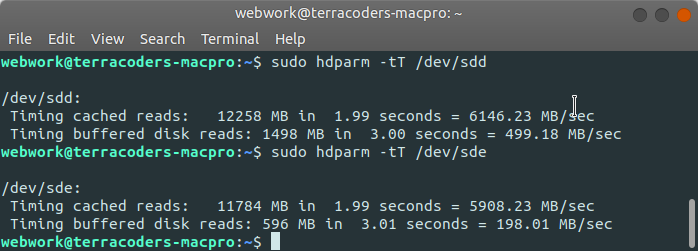

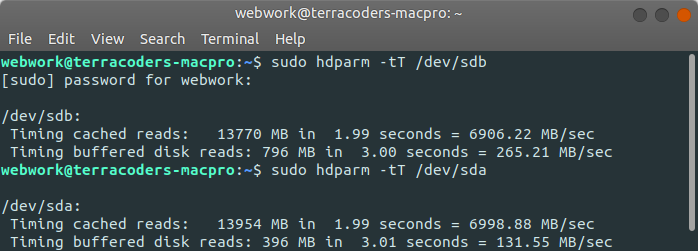

Let's get started with testing. I first want to get a feel for how the 2.5" SSD performs in the x16 PCIe slot. For this test, my SSD is actually under the /dev/sdd path. I'll use the mSATA SSD in the x4 slot for comparison; the path for that drive is /dev/sde. I can use sudo hdparm -tT /path/to/device to assess read speed on the drives. The lowercase -t option gives me the buffered disk read speed; the uppercase -T option gives me the cached read speed. The buffered speed seems to be what the manufacturer lists in their specs as an "average read speed." The cached figure is served from memory rather than from the disk, so it mostly reflects the system's memory and cache throughput and should rightfully be much faster. Let's see what I get:

The buffered read speed for the 2.5" SSD (/dev/sdd) in the x16 slot isn't too far from the manufacturer's average of 513 MB/sec for sequential reads. Ideally I'd repeat the test a few times to get an average, but I'm close enough that I don't really feel obliged. Interestingly, the mSATA disk (/dev/sde) performs noticeably worse in the x4 slot. Let's see what happens if I swap the locations of these two drives, moving the 2.5" SSD to the x4 slot and the mSATA drive to the x16 slot:

Don't be confused by the path names; the first test here is still the 2.5" SSD. The drop in performance from moving it to the x4 slot pretty much rules out ever putting it there. The mSATA drive performs much better in the x16 slot, but still not well enough to justify giving it that slot permanently.
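Reading numbers off screenshots gets tedious once drives start swapping paths, and it makes the repeat-and-average step I skipped easy to put off. Here's a minimal sketch, assuming Python 3 on the same Ubuntu box, that runs hdparm -tT a few times, parses the "Timing cached reads" and "Timing buffered disk reads" lines, and reports the mean and spread of each. The hdparm man page itself suggests repeating the timing a few times on an otherwise idle machine. The sketch assumes hdparm prints its rates as "= <number> MB/sec"; the function names are my own.

```python
import re
import statistics
import subprocess

# Assumes rates are printed as "... = <number> MB/sec", as in typical hdparm output.
_RATE = re.compile(r"Timing (cached|buffered disk) reads:.*?=\s*([\d.]+)\s*MB/sec")


def parse_hdparm(output: str) -> dict:
    """Pull the cached and buffered read rates (MB/s) out of `hdparm -tT` output."""
    rates = {}
    for kind, value in _RATE.findall(output):
        rates["cached" if kind == "cached" else "buffered"] = float(value)
    return rates


def run_hdparm(device: str) -> str:
    """Run `hdparm -tT` once on `device` and return its stdout (needs root)."""
    result = subprocess.run(
        ["hdparm", "-tT", device],
        stdout=subprocess.PIPE,
        universal_newlines=True,  # decode output as text
        check=True,
    )
    return result.stdout


def benchmark(device: str, runs: int = 3, run=run_hdparm) -> dict:
    """Repeat the test; report (mean, sample std. dev.) in MB/s per metric."""
    samples = {"cached": [], "buffered": []}
    for _ in range(runs):
        for kind, value in parse_hdparm(run(device)).items():
            samples[kind].append(value)
    summary = {}
    for kind, values in samples.items():
        if values:
            spread = statistics.stdev(values) if len(values) > 1 else 0.0
            summary[kind] = (statistics.mean(values), spread)
    return summary
```

To use it, add a line like print(benchmark("/dev/sdd", runs=3)) and run the script with sudo, double-checking the device path first since it moves around. The older stdout/universal_newlines arguments are used instead of capture_output/text so the script also runs on the Python 3.6 that ships with Ubuntu 18.04; the run parameter exists so the parsing can be tried on saved output without root.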

Still, many owners of older Mac Pros might not think to put their solid-state drives on the PCIe bus. After all, the Mac Pro has dedicated SATA bays for up to four drives, which is probably the first place most people would think to put their hard drives. Let's see what happens if we put the 2.5" drive in one of the bays; for comparison, I'll also clock a mechanical disk on one of the same SATA connections:

While the 2.5" SSD does better in the drive bay than it does in the x4 PCIe slot, that's still roughly a 50% reduction in buffered read speed compared to the x16 slot. Its performance is still about twice that of the traditional hard drive, though.
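The two comparisons in this paragraph (a roughly 50% drop relative to the x16 slot, and about twice the mechanical disk) are the same arithmetic seen from two sides: one reading expressed relative to another. A minimal helper, taking two buffered-read figures in MB/sec, makes it explicit; the name compare is my own.

```python
def compare(reference_mb_s: float, other_mb_s: float) -> tuple:
    """Express `other` relative to `reference` two ways.

    Returns (percent change, ratio): a percent change of -50.0 means half
    the reference speed, and a ratio of 2.0 means twice as fast.
    """
    if reference_mb_s <= 0:
        raise ValueError("reference speed must be positive")
    ratio = other_mb_s / reference_mb_s
    percent_change = (ratio - 1) * 100
    return percent_change, ratio
```

For the bay result, the x16 reading is the reference and the bay reading is the other value; for the hard-drive comparison, the hard drive is the reference and the SSD-in-the-bay reading is the other value.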

x16 for the Win?

The results aren't much of a surprise: x16 slots are built for throughput. The change in startup speed once I put the 2.5" SSD there was substantial enough that I could feel it. It's certainly nice to be able to put a number on the change in performance, though. It's also nice to see that the numbers I got were pretty well in line with the manufacturer's claims.

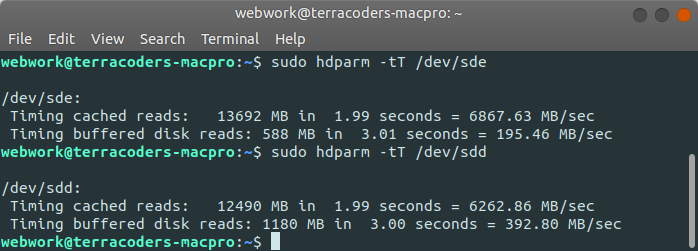

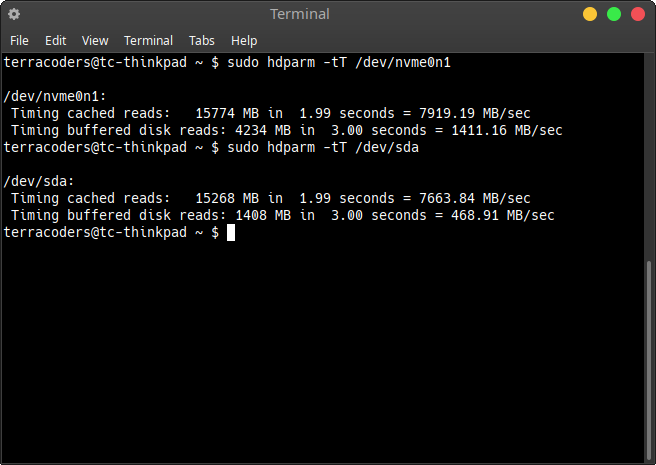

One of the reasons I like to play with my Mac Pro, though, is to see how it measures up against more modern computers. So, to really drive the analysis home, I owe it to myself to do one last test: let's look at the speeds on my ThinkPad E570. My ThinkPad boots from an NVMe SSD, but I keep a second 2.5" SSD in the SATA bay. That 2.5" disk is basically the same model as the one in the Mac Pro: an OWC Mercury Electra 6G (although 120GB instead of 1TB). It'll be interesting to see how they compare:

That first test is the NVMe solid-state drive. Its buffered read speed of 1411.16 MB/sec is almost three times that of my best-performing configuration on the Mac Pro! The second test is, of course, the 2.5" SSD over SATA. While this is considerably better than the SATA connection on the Mac Pro, the Mac Pro beats this number if you use the x16 PCIe slot. That has to count for something. A respectable computer in 2021 supports NVMe drives, but let's not forget that my Mac Pro is 13 years old. It still pulls its weight next to many lower-end modern computers. My kids have low-end Lenovos without NVMe drives; at a similar price point, though, the 2008 Mac Pro has far better processing power and can deliver comparable disk read speeds.

This sometimes blows me away, and it's perhaps one of the rare occasions I can give Apple credit for being a true powerhouse in the computing world: building a computer in 2008 that's still competitive after 13 years is no small feat. Yes, it probably cost $3000 back in the day, but that comes out to about $230/year over that time frame. I'll wager there are people who have spent the same amount, or more, upgrading to new computers over the same 13 years just to keep up with the march of technology.
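The two bits of arithmetic in the closing comparison are easy to double-check. The Mac Pro's best measured figure only appears in a screenshot, so this sketch assumes the manufacturer's 513 MB/sec rating as a stand-in for it, and assumes 13 years of service (2008 to 2021); the other inputs are the figures quoted in the post.

```python
# Quick check of the closing numbers; all inputs are figures quoted in this post.
NVME_BUFFERED_MB_S = 1411.16    # ThinkPad NVMe buffered read
MAC_PRO_BEST_MB_S = 513.0       # stand-in: manufacturer's rated read for the 2.5" SSD
MAC_PRO_PRICE_USD = 3000        # rough original price
YEARS_IN_SERVICE = 2021 - 2008  # 13 years

speedup = NVME_BUFFERED_MB_S / MAC_PRO_BEST_MB_S
cost_per_year = MAC_PRO_PRICE_USD / YEARS_IN_SERVICE

print(f"NVMe vs. best Mac Pro config: {speedup:.2f}x")  # 2.75x
print(f"Mac Pro cost per year: ${cost_per_year:.2f}")   # $230.77
```

A ratio of about 2.75 squares with "almost three times," and roughly $231 a year matches the "about $230/year" estimate.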